Слайд 2

Agenda

Структура статьи, типичные ошибки

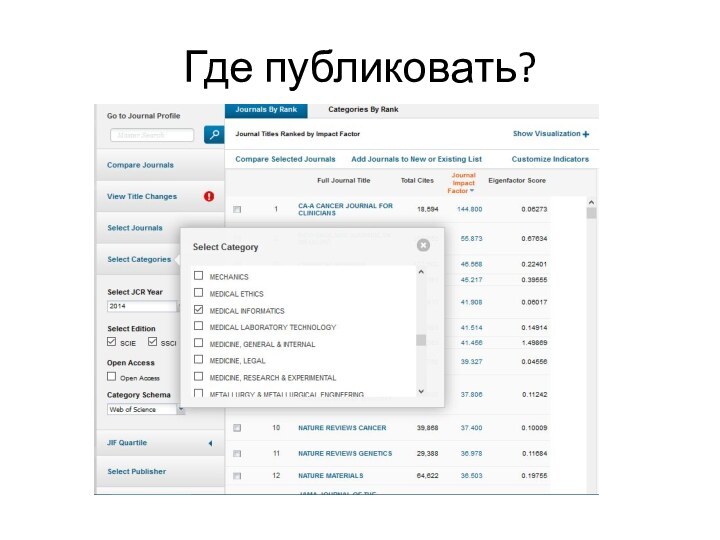

Что первично: подбор журнала или

написание статьи?

Как понять интересна ли статья мировой общественности или

только автору?

Как отличить действительно хорошую и перспективную статью?

Ответ на рецензии. Как правильно оформить ответ на рецензию? Можно ли опровергнуть мнение рецензента?

Слайд 3

О себе

Профессор кафедры программной инженерии ИК

Научные интересы:

Мед. информатика,

анализ данных, системы поддержки принятия решений, оптимизация систем управления

Слайд 5

Что и для кого мы публикуем

Предмет исследования

Цель

to exchange

the scientific knowledge

to ask and answer specific questions

Аудитория

scientists and

those interested in the subject

a publisher or an editor

Слайд 6

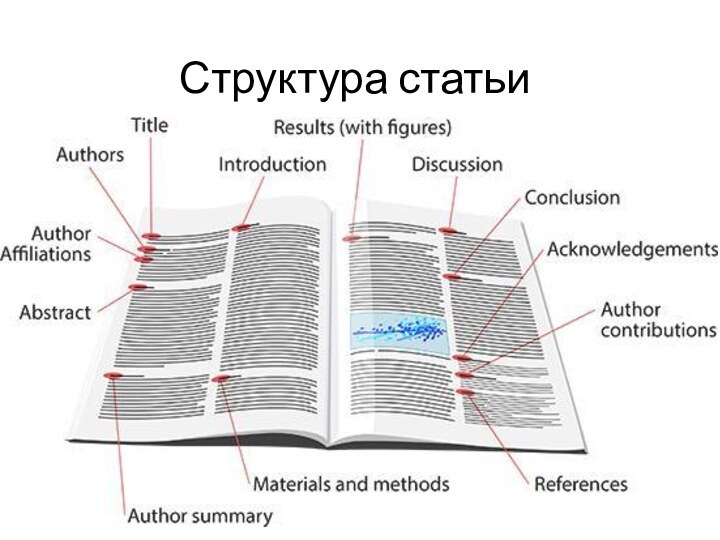

IMRaD формат

Introduction

Methods

Results and

Discussion

What problem was studied? What others

and you did? Your study area.

How do you did

it?

What did you find out?

What do your findings mean?... Combine w/conclusion/summary and future plans

Слайд 7

Introduction

Begin by introducing the reader to the state

of the art literature

Important function: establish the significance of

your current work: Why was there a need to conduct the study?

State clearly the scope and objectives. If possible, specify hypothesis

The introduction can finish with the statement of objectives

Слайд 8

Materials and Methods

Main purpose: provide enough detail for

competent worker to repeat study and reproduce results

Equipment and

materials available off the shelf should be described exactly

Sources of materials should be given if there is variation in quality among supplies.

Modifications to equipment or equipment constructed specifically be carefully described.

Usual order of presentation of methods is chronological

Be precise in describing measurements and include errors of measurement

Слайд 9

Results

In the results section you present your findings:

display items (figures and tables) are central in this

section

Present the data, digested and condensed, with important trends extracted and described.

Results comprise the new knowledge that you are contributing to the world – hence, it is important that your findings be clearly and simply stated.

Combine the use of text, tables and figures to condense data and highlight trends.

Слайд 10

Discussion

Now its time to interpret your results:

Do they support your hypothesis?

Are they in line with

other published studies?

What do they imply for research and policy making?

Is that supported by your results?

Are other interpretations possible?

What are shortcoming of your study?

How could you improve your study?

Слайд 11

Discussion – typical structure

Statements of principal findings

Strengths and

weaknesses of your study

Differences to other studies

Implications of your

study for research or policy making

Open questions and future research

Слайд 12

Conclusions – typical content

Very brief revisit of the

most important findings with a focus on the advance

behind the state of the art

Final judgment on the importance and significance of the findings with respect to implications and impact

Suggested further research

Suggested policy changes

Слайд 14

Как не нужно писать

Вы сделали что-то впервые?

Вы первый,

кто занимается этой темой?

У вашего исследования нет аналогов?

http://www.nobelprize.org/

Слайд 15

Основные ошибки

Ссылаться только на себя или научного руководителя

+ классический учебник 1953 года

Не сравнивать свои результаты с

существующими исследованиями

Писать, что результаты уникальны

Повторять чужие мысли, не ссылаясь

Слайд 16

Introduction – common mistakes

A common mistake is to

introduce authors and their areas of study in general

terms without mention of their major findings

Avoid writing “we did it because we could”

Avoid a list of points or bullets; use prose

Слайд 17

Results – common mistakes

The results should be short

and sweet. Do not say "It is clearly evident

from Fig. 1 that bird species richness increased with habitat complexity". Say instead "Bird species richness increased with habitat complexity (Fig. 1)". •

However, don't be too concise. Readers cannot be expected to extract important trends from the data unaided.

Слайд 18

Discussion – common mistakes

Discussion unrelated to the results

Bad

structure which meanders back and forth

Missing comparison of your

results to results from the literature

Слайд 19

Conclusions – common mistakes

Copy and paste from other

parts of the paper

Treat it as a summary

Слайд 20

Avoid l o n g convoluted sentences

"It is

shown that area-averaged precipitation in “NoName” River basin was

evaluated with Thiessen Polygon method for the 1980 – 1996 period and estimated with the arithmetic average for the 1963 – 1998 period and it is shown present high variability at various temporal scales.“

“Space-time variability in water availability will be study as function of changes (mean and dispersion) in daily, monthly and yearly amounts of precipitation, characteristics of intense precipitation and drought episodes in the region, as well as function of different background scenario conditions caused by wildfires.”

Слайд 21

Что первично: подбор журнала или написание статьи?

Слайд 22

What problem was studied?

What others and you

did?

Your study area

How do you did it?

What did

you find out?

What do your findings mean?... Combine w/conclusion/summary and future plans

Слайд 25

Как понять интересна ли статья мировой общественности или

только автору?

Как отличить действительно хорошую и перспективную статью?

Слайд 26

Хорошая статья

Has exactly one, specific, thing to

communicate

a description of your new theory

an analysis of how

a known theory can be applied to a new situation

a proof of concept

a re-analysis of someone else's work, or a replication with/without minor variations

an investigation of how a particular approach performs against a standard benchmark

a comparison between two approaches (maybe one of which is the author's own)

Слайд 27

О чем писать статью

О том, что вам интересно

О

том, что заботит научное сообщество

О том, в чем вы

разбираетесь

Не забудьте обсудить результаты

Слайд 28

Что читать

Sciencedirect

Pubmed

WOS

Scopus

Читайте те журналы, где хотите публиковаться

Слайд 29

Ответ на рецензии. Как правильно оформить ответ на

рецензию? можно ли опровергнуть мнение рецензента?

Слайд 30

Good practices

Take your time to respond to the

referees questions - The response to the referees letter

is quite often longer than the submitted manuscript

Make it easy for referees to detect changes and to tick their list

Only argue with the referee if absolutely necessary - If the referees did not get your point you might have to express it differently. - If you disagree with the referees try bring this up in the discussion.

Be polite - Remember, the referees are doing you a favor.

Слайд 31

Общение с журналом

Reviewer: 1 Comments to Author: Please

provide sufficient comments to the author(s) to justify your

recommendation. General remarks: The paper addresses the semantic interoperability issues in regional healthcare networks demonstrated at the Bavarian GO IN net established in the city of Ingolstadt. It is based on an analysis of communication requirements expressed by the involved medical professionals including the data set and its structure. The analysis has been performed using a questionnaire as well as a small number of direct visits combined with observations and interviews. The solution proposed is a data set derived from the CCR specification and adapted to the German needs. This data set is represented by archetypes using and adapting existing archetypes or defining new ones. The described method is technically sound. However, the paper suffers from the lack of a sophisticated scientific methodology, defining the objectives independently of a favored solution, considering alternative solutions, discussing the pros and cons, also comparing the own solutions with the work others. When going for a certain solutions supported by a minority, mainstream alternatives should be represented, discussed and the selected solution objectively reasoned. This comment doesn’t means that the mainstream is always right, but requires arguments against. Most countries around the globe implement CDA-based solutions for semantic interoperability, thereby reusing a bunch of existing and standardized solutions. Also the epSOS project referenced several times is based on that approach developed by HL7/IHE. The papers wording could be improved; an English punctuation would improve the readability of the text. Furthermore, the very few spelling errors have to be eliminated. The reference list mainly focuses on recent papers, usually not reflecting the ideas origin. While this wouldn’t be acceptable for a review article, it might be tolerable for an implementation study as presented here. Detailed Comments: Introduction (page 1, line 2): The authors claim that EHR provides links to medical knowledge. Most of the existing EHR systems don’t provide this facility despite this would very desirable. Introduction (page 1, line 3): The authors claim that EHR provides greater security. Fact is that the security and privacy challenges of EHR systems are higher than the paper based solutions. Introduction (page 1, line 8): There are no owner of requirements but experts and documenters defining them. Introduction (page 1, line 18): A common data set provides syntactic interoperability. For semantic interoperability, the harmonization of the underlying concepts is essential. On the basis of shared knowledge, semantic interoperability of possible even if the data set is not the same. Of course, a common data set eases the endeavour for semantic interoperability. In international affairs and cross-cultural communications, such constraints are sometimes not feasible. Introduction (page 2, line 2ff): While BDT is an EDI protocol to exchange medical data, all referenced examples go beyond data exchange. Introduction (page 2, line 4ff): Based on the VHitG Doctors’ letter, implementation guides have been derived according to the CDA methodology, clearly stating those definitions. Introduction (page 2, line 16ff): The widely implemented solutions do not represent CCR but CCD, i.e. the representation of CCR through CDA. Methods (page 3, line 9): A data set doesn’t have requirements. Results (page 4, 3rd last line): Does the archives contain the medical documents as scans (images) or as reconstructed (e.g. OCR analysis) text documents. Results (page 5, line 17ff): Unnecessary repetition from page 4. Results (page 6, figure 2): This figure represents the social aspect of interoperability based on common interest and willingness (BB ref?). It expresses the common attitude “I need everything but my partner needs nothing”. Results (page 6, line 8): Providing a number (ratio) might be of interest. Results (page 8, line 10ff): Most of the addressed information is available in standards from HL7, IHE, ISO/IEC and IEEE. Results (page 9, line 4ff): Should perhaps be moved to the discussion section. Results (page 10, line 3): Replace “adding” by “establishing” or “forming”. Conclusion (page 12, line 17): The European epSOS project has agreed on an HL7/IHE approach based on CDA. Reviewer: 2 Comments to Author: Please provide sufficient comments to the author(s) to justify your recommendation. The authors describe a dataset that was developed through a series of interviews and surveys with physicians distributed across 480 practices in Bavaria, Germany. The work conforms to the ISO 13606 archetype model and Continuity of Care Record standards, which provide the basis for interoperable electronic health record data exchange. The dataset is a first step towards a larger effort to establish a data exchange methodology that will consist of a communication server aimed at creating semantic interoperability between heterogeneous electronic health records systems. This framework promises to extend existing archetypes to meet the needs of ByMedConnect. The introduction section develops the argument that obstacles to advancing electronic health records are primarily due to human factors, but neglects to identify technological difficulties (i.e., drug interaction knowledge-bases, decision support systems, etc.). Although it is necessary to address socio-technical issues, it would be good to see a more balanced perspective in the introductory review. As well, the logic within the introductory section does not follow – for example, the reference that describes positive acceptance measures highlights what physicians look for, but it does not indicate how this relates to interference with workflow. Semantic interoperability refers to systems with heterogeneous data schemas that can seamlessly exchange information stored in any compliant systems. The authors describe semantic interoperability in terms of a common data set, which does not contain the semantics of the data model itself. The key to semantic interoperability is that the items in different data schemas map to common concepts that are defined within a semantic model, such as an ontology. It is possible that the archetype model defines the semantics; however, there has been extensive work in the semantic interoperability domain using UMLS, SNOMED, HL7, etc., and there is little acknowledgement or review of these efforts by the authors. Although developing standards are critical for local data exchange, it is also necessary to reuse standards and not reinvent the wheel. In the methods section the authors discuss the observations completed for their research. The observations included interviews and surveys with doctors from 480 practices, but there is no mention of how many doctors were actually interviewed or surveyed. As well, there is no mention about the nature of the survey in terms of the questions asked and if they were open ended, semi-structured, or likert-scaled, etc. The results reported in the Doctors’ Network section reports a number of percentages but do not report the specific number of doctors interviewed. There needs to be a description of “N” or the number of doctors that reported using each of the practice management systems. There also needs to be a table that details the practice management systems, particularly the 17 systems that have no name reported. In order to exchange data between 21+ practice management systems you need to have a clear catalog of the schema for each system and it is impossible to evaluate the quality of a data exchange data schema when there is an incomplete description of the systems being used. The figures in the article are incomplete and difficult to understand. Figure 1 does not exist and is not present in the article. Figure 2 does not have a clear key and does not indicate how many doctors/practices are represented, and it is not clear what the two “keys” represent (i.e., requested vs. the other likert scale). Figure 3 does not include labels for the arrows and it is unclear what the flow of information is, and the screen shots of the forms are too low of resolution to be useful in the diagram – the figure would benefit from a more clear description of the flow of information and specific use case that walks the user through this very complex and confusing diagram. Finally, when the CCR record was verified – what was the measure of statistical significance? Developing a standard for data exchange within a local set of clinics is a commendable activity. The reuse of standards such as ISO 13606 archetype model and Continuity of Care Record will help in wider spread interoperability with clinics outside this specific location, however, the notion of semantic interoperability does not conform to the same definition as proposed by the semantic web that moves beyond data sets and into the semantic modeling of concepts or archetypes and the relationships between them. The authors need to report the number of surveys that were filled out and the statistics that were used to evaluate the significant differences between information categories, as well as basic demographics about who filled out the survey (i.e., was it a whole clinic, specific physician, male or females, etc.). A more through review and reporting of the surveys conducted would be invaluable to the clinical informatics community and to the investigators of this study. <...> Reviewer: 3 Comments to Author: Please provide sufficient comments to the author(s) to justify your recommendation. It is important to realize doctors’ positive attitude to promote EMR or EHR, and this is the main reason to conduct questionnairesfor doctors beforehand tocreate archetypes in this paper. It is not clear whether you canwork out these problems of doctors’ reluctance or not however. The conclusion is you justtried to create the archetypes together with doctors to avoidproblems but you showno specific outcome,so did it really change the situation by doing that? Are there any positive structures of archetypes obtained by asking the doctors’ desires or concepts?You mentioned that you adapted many doctors’concepts in your dataset, though I could not realizespecific doctors’ desires and concepts in this dataset structure. What are the differences in comparisonwith other archetypes without interaction of doctors?You should show some examples to elucidate the specific relations and make it clear because it is your main objective. The structure of this paper needs to be improved.You have put your discussion after the conclusion, though discussions are almost always located before the conclusions. Some parts of results should be written in methods. You should make the contents of methods and results more coherently and write less narratively because this is anoriginalscientific article.References must be written according to the instructions to authors demonstrated in MIM. If possible, you could cite articles from MIM, too.

Слайд 32

Dear reviewers,

Thank you very much for your

valuable comments that helped to improve the manuscript. Please

see below the answers to the comments that you made. Answers to the reviewer one are marked blue in the text. Answers to the reviwer 2 are marked green.

Reviewer #1: Evaluation team included persons from Clinic in Munich and clinic in Tomsk, and Siberian medical university. Additionally there were persons involved from UMSSoft, the company that had delivered the EHR system. This raises questions about the neutrality of these people? Involvement of company people requires more detailed information: why they were involved, how they were involved and how neutrality apsects were handled?

To preserve the neutrality of the company it was responsible only for technical part of the study. They set up the infrastructure and took care that everything worked properly on the technical side. No people from the company were involved or had access to the evaluation tasks definition and data collection.

Evaluation criteria are presented in fig 5 and fig 6. Usability is one of the criteria, however, it is only studied from learnability perspective, and in connection to patients. Why not for physicians? Why the other aspect of usabilityt were not included? Like ease of use, memorability, efficiency of use, error frequency and their severity. This aspect should ne more elaborated in the revision.

We actually studied the usability of the system in regards of the doctors. The figures 5 and 6 did not contain this because we mistakenly did not want to confuse reader by putting usability as a part of usability. In this revision we have reworked this. We added another figure (10) and reworked figure 11 to emphasize the evaluation of usability.

Usability

The metrics for learnability were derived from the Data accessibility and Doctors’ performance criteria. The measurements were performed during the evaluation of the Data accessibility and Doctors’ performance.

Metrics

1. Number of mistakes doctors made during the completion of tasks;

2. Number of mistakes doctors made during the completion of tasks;

3. Level of users’ satisfaction

Evaluation process

The evaluation process was based on the evaluation of the metrics (that are evaluated within other criteria) several times, comparing the progress of the users. We also rated the mistakes to evaluate how critical they are to complete the task. The mistakes were rated as follows:

• Minor: caused a time delay or an extra operation required to complete the task

• Medium: caused a time delay and required to start the task from the beginning.

• Major: caused a misinterpretation of data or using a wrong data during the process.

To evaluate the level of satisfaction we asked users to estimate their level of satisfaction using 1-10 scale.

Also in the revision, the applied methodology and its pros and cons in this study should be discussed/elaborated in the discussion and conclusion. Was the approach suitable, what were the benefits, or drawbacks, in applying this methodology.

The evaluation approach that we applied to study the efficiency of the data visualization method showed its flexibility and adaptability to different evaluation tasks. The approach is very scalable and can be applied both for the whole EHR infrastructure and for the certain aspect of the EHR (as in the presented study). On the other hand it is too generic and did not provide any evaluation methods or metrics. So it can serve as a skeleton and requires a very detailed specification of evaluation criteria, metrics, data acquisition and analysis methods.

Reviewer #2:

The abstract of the manuscript reports that "The objective of this evaluation study is to assess a method for standard based medical data visualization." Fulfillment of this objective requires 2 components -- a description of the data visualization method, and a description and results of the evaluation. The text of the article is replete with evaluation methods, but provides no description of the visualization method. The reader has no notion of the visualization method that is being evaluated, and how it applies to and used by doctors or patients. The data description is given as ISO 13606 archetype based medical data which I know is a standard for interoperability, but does not, by itself, imply any specific type of data or a specific mode of visualization.

ISO 13606 Archetypes are hierarchical structures and support an XPath-like definition to access substructures [21, 22]. An XML schema for visual medical concepts was developed considering the archetype model of ISO 13606 to ensure a full compatibility with archetypes. Each visual medical concept is stored as XML file.

A visual medical concept is logically divided into three main sections: metadata, visual content and visual layout. The metadata section specifies the properties of the VMC. The visual content section defines the data fields that are included in the VMC. The data fields are derived from different archetypes and combined into visual groups. Visual groups are processed as one entity when the GUI is being built. The visual layout section specifies the presentation properties of the GUI elements. The VMC allows specifying the user groups and the media for each element.

The XSL templates provide a platform-independent description of the actual display of the visual concept, for example, an HTML page. Templates implement the concept of different views on the same data.

The visual model is based on the archetype model of the ISO 13606. On the instance level there are archetypes and visual medical concepts that contain the corresponding presentation options. Visual medical concepts add a detailed description of visual properties of each archetype data field. A VMC combines and organizes data fields of an archetype into visual documents. In our projects we used XML Schema to define the archetypes instead of ADL definitions [20]. On the data level VMC files with specified content and presentation properties are associated with corresponding XML data files. This combination is used by the visual templates to build the user interface. To present the medical document an EHR System applies a predefined XSL Template. The template analyses the visual medical concept file and defines the following parameters:

Visual document content;

Data source files;

Data fields;

Visual groups;

Visual document layout;

Presentation type (diagram, table, etc.);

Users and devices that this view is available for.

XSL templates are used in the proof of the concept application and are not the part of the developed information model.

What type of data is being visualized -- Labs, Vital signs, medication use, visit activity, or perhaps some combination of all of them?

We used the following dataset in the evaluation study:

For the patients:

Laboratory tests results

Vital Signs

We also added visit activities for the doctors in the Doctor’s performance tests. The data that was used for each task is presented in the evaluation process section of each metrics.

On page 10, the text finally mentions two of the components of the EHR -- 1. Web-based appointment system, 2. Web-based access to the personal medical data, but again no details of the visualization are provided. On pages 5 and 6, A reference (15) is provided for is provided for a visual medical concept (VMC), but the detail provided is not enough to allow the reader to understand the fundamentals of this visualization approach and why it may be better than others.

We added the description of the visualization approach in the Object of Evaluation section.

The evaluation methods are quite detailed, and seem to follow a motif of Assessment Processes and Metrics. THis information may be better presented in a summary table to enable better comparisons of the differences in approaches.

The choice of information displayed on the test subjects is puzzling, with only prior EHR experience and specialty for the doctors and seemingly superfluous ID numbers for the patients.

We reworked the table removing the IDs

The axes, particularly the x-axis in Figure 7 requires a label.

We added the axes in Figure 7 (see in the manuscript)

Similarly it is not clear what the x-axis is in figure 8, and why there are no bars over the "5" on the x axis.

We added the axes in Figure 8 (see the manuscript). There should not be any bars over “5” it was corrected.

In figure 9, the doctors are represented by different colored bars that are grouped over an x-axis with numbers from 1 to 5, and again, it is not clear what the numbers 1 through 5 represent. In Figure 8, the number may present the doctor IDs, but in figure 9, it is clearly not the case. Perhaps the x axis numbers in figure 9 represent a time dimension, but the text describing figure 9 says "Doctors from both groups demonstrated better performance working with the solution." but I do not see hoe figure 9 shows a comparison of "the solution" with something else (presumably paper). From the title of figure 9, I presume it is showing the speed with which doctors did something, and perhaps that speed was improving over time, but this exercise requires too much guesswork on the part of the reader.

The testing day one demonstrates the performance using the paper based process for the doctors who did not use EHR system before (Doctors’ IDs 1,2,4) and native EHR for the experienced doctors (Doctors’ IDs 3 and 5).

We also added the axes in Figure 9 (see in the manuscript).

Figures 10 and 11 seem to imply a time dimension in the x axis and a y axis that shows improvement in satisfaction and efficiency. Despite the earlier text describing the assessment of efficiency, I see no indication of how the scale on the y axis of figure 11 is derived, and how significant the changes are.

We added axes to the mentioned figures and added a text description

The solution has also proved to provide efficient modeling functionality. The performance shown by doctors has reached the expert level both in wizard and manual modes (figure 12). As the expert level time is a time that the developer of the system showed the fact that doctors reached this performance demonstrates the usability and efficiency of the system.

Слайд 34

Управление

Исследования

Принятие решений

Персонифицированность экспертных знаний

Неполнота знаний предметной области

Непроработанность стандартов

Разнородность

систем

Региональная статистика

Стандарты лечения

Содержат нечеткость

Слайд 35

Проекты

Интеллектуальная информационная система мониторинга качества процесса оказания медицинской

помощи и прогнозирования страховых выплат в рамках программы обязательного

медицинского страхования

Разработка технологии нормализации, обмена и анализа медицинских данных на основе международных стандартов.

Интеллектуальная экспертная телемедицинская система эффективной автономной генерации врачебных заключений на основе результатов лабораторных исследований пациента.

Слайд 36

Спасибо

georgy.kopanitsa@gmail.com

Слайд 39

Как поучаствовать в проекте

Что Вы можете предложить?

Начните с

малого

Действуйте!

Слайд 40

Варианты кооперации

Совместный грант

Работа в лаборатории

Слайд 41

где искать партнеров и гранты

https://www.researchgate.net/

https://www.daad.de

Слайд 43

Зачем?

Отличное начало научной карьеры

Благосклонность работодателей

Интересно и весело

Слайд 44

Процесс защиты

По совокупности трудов

Диссертация

2 оппонента 3-4 месяца

Совет

3-5 профессоров

3-4 месяца на утверждение защиты