spend time towards end of semester – more details

onsimulators in a few weeks

Grading:

50% project

20% multi-thread programming assignments

10% paper critiques

20% take-home final

FindSlide.org - это сайт презентаций, докладов, шаблонов в формате PowerPoint.

Email: Нажмите что бы посмотреть

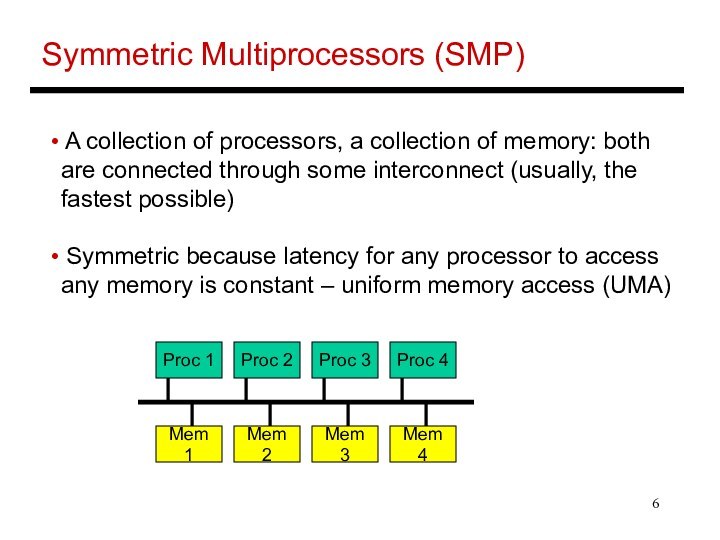

Proc 1

Proc 2

Proc 3

Proc 4

Mem 1

Mem 2

Mem 3

Mem 4

Proc 1

Mem 1

Proc 2

Mem 2

Proc 3

Mem 3

Proc 4

Mem 4